New Research Comparing Different Altmetrics Tools

The term altmetrics means different things to different people. At Plum Analytics it means any metrics about any type of research output. The ubiquity of the Internet has made sharing everything, including research, simple, and each new way of sharing output brings a new way to understand the impact and stories of research. Because research is no longer limited to print articles in print journals, we have new ways of examining it. This is where altmetrics plays a role.

Altmetrics challenge old ways of thinking, and with this challenge come research opportunities. Recently, a group of researchers from the Leibniz Institute for Science and Mathematics Education, the University of St. Gallen, the Leibniz Information Centre for Economics, the Institute for the World Economy, and the Leibniz Information Centre for Life Sciences studied the different altmetrics tools available (Jobmann et al., 2014).

The title of their report is “Altmetrics for large, multidisciplinary research groups: Comparison of current tools,” and the full reference is listed at the end of this post. To date, most altmetrics research has compared altmetrics to citation counts. What is interesting about this new article is that it acknowledges that not all altmetrics are created equal, and it compares the altmetrics providers to each other.

Here is the abstract:

Most altmetric studies compare how often a publication has been cited or mentioned on the Web. Yet, a closer look at altmetric analyses reveals that the altmetric tools employed and the social media platforms considered may have a significant effect on the available information and ensuing interpretation. Therefore, it is indicated to investigate and compare the various tools currently available for altmetric analyses and the social media platforms they draw upon. This paper will present results from a comparative altmetric analysis conducted employing four well-established altmetric services based on a broad, multidisciplinary sample of scientific publications. Our study reveals that for several data sources the coverage of findable publications on social media platforms and metric counts (impact) can vary across altmetric data providers. (Jobmann et al., 2014)

Background

This study looked at research articles from a variety of disciplines represented by the five sections of the Leibniz Association. These are:

- Humanities and Educational Research

- Social Sciences/Spatial Research

- Life Sciences

- Natural Sciences/Engineering

- Environmental Sciences

The study looked at four altmetrics providers:

- Plum Analytics

- ImpactStory

- Altmetric Explorer

- Webometric Analyst

The researchers evaluated the metrics from these four providers across those five disciplines to determine how the providers compared with each other.

The researchers selected research articles published in 2011 and 2012 from across the five Leibniz Association sections. After some manual validation and duplicate removal, the study covered 1,740 DOIs. They chose DOIs as the identifier because all four altmetrics services support them.

Using the Tools

The article also commented on the ease of use of the altmetrics tools used to conduct the research. The researchers had a variety of problems with ImpactStory, including unreliable delete functionality and capacity restrictions when uploading the DOIs. Webometric Analyst required a Microsoft Azure account key and ran only on Microsoft systems. It, too, had capacity problems: it restricted data download from Mendeley to 500 items per hour.

With respect to Altmetric Explorer, the researchers commented:

Altmetric Explorer itself can only process 50 identifiers at a time. Identifiers have to be numeric, thus, the link format, e.g., http://dx.doi.org, has to be removed from the data before analyses. If searching for articles with DOIs altmetric data has to be immediately exported, because the results cannot be saved. Only the search process with the respective settings can be saved under /my Workflow/. Therefore, Altmetric Explorer is only suitable for an immediate analysis with real-time data. (Jobmann et al., 2014)
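The preparation step described in that passage (removing the link format and submitting identifiers in groups of 50) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the researchers' actual workflow: the function names, the list of resolver prefixes beyond http://dx.doi.org, and the sample DOIs are all hypothetical.

```python
from typing import Iterator, List

# Assumed resolver prefixes; the quoted passage names only http://dx.doi.org.
DOI_PREFIXES = (
    "http://dx.doi.org/",
    "https://dx.doi.org/",
    "http://doi.org/",
    "https://doi.org/",
)

# Per-search limit reported in the study for Altmetric Explorer.
BATCH_SIZE = 50


def strip_doi_prefix(identifier: str) -> str:
    """Return the bare DOI, dropping a leading resolver URL if present."""
    cleaned = identifier.strip()
    lowered = cleaned.lower()
    for prefix in DOI_PREFIXES:
        if lowered.startswith(prefix):
            return cleaned[len(prefix):]
    return cleaned


def batches(items: List[str], size: int = BATCH_SIZE) -> Iterator[List[str]]:
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


if __name__ == "__main__":
    # Hypothetical identifiers, for illustration only.
    raw = [f"http://dx.doi.org/10.1000/example.{i}" for i in range(120)]
    dois = [strip_doi_prefix(item) for item in raw]
    for number, group in enumerate(batches(dois), start=1):
        print(f"batch {number}: {len(group)} DOIs")
```

Because results could not be saved, each batch would also need to be exported right after it is searched; that manual step is outside the scope of this sketch.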

The researchers did not have problems with Plum Analytics:

Plum Analytics offers interested institutions a free trial account. In addition, trial users can refer to an EBSCO customer service representative in case of questions. Plum allows for the upload of an extensive list of DOIs and generates downloadable search results in various data formats. No difficulties were encountered in the use of the service. (Jobmann et al., 2014)

Results

The researchers found considerable variability in the kinds of metrics the different services covered, with Plum Analytics covering 26 different metrics and ImpactStory and Altmetric Explorer each covering 12. Webometric Analyst has two versions: one that gets all of its data from Mendeley, and one that gets all of its data from Altmetric Explorer. The two are mutually exclusive. The metrics from the version that draws on Altmetric Explorer are identical to those of that service. And, as one would expect, the version that draws on Mendeley has only Mendeley metrics and no others. Because of these factors, Webometric Analyst is included in the study only to a limited degree.

The researchers described their results:

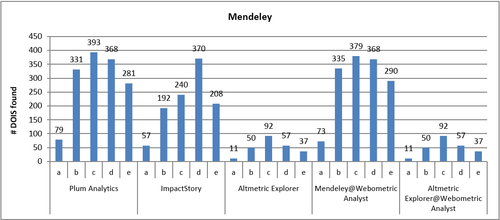

Plum Analytics (total of DOIs found: 1,452) and Webometric Analyst (1,445 DOIs) appear to provide the best coverage of Mendeley, followed by ImpactStory (1,067 DOIs) (see Figure 3). The Altmetric Explorer only identifies a fraction of the DOIs identified by the other services (247 DOIs). Interestingly, Plum Analytics and ImpactStory generate quite similar data for publications from Sections D (Mathematics, Natural Sciences, Engineering) and E (Environmental Sciences), while the other sections appear underrepresented by ImpactStory.

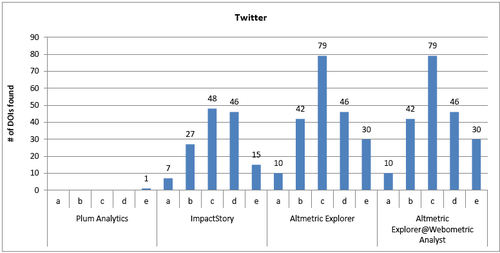

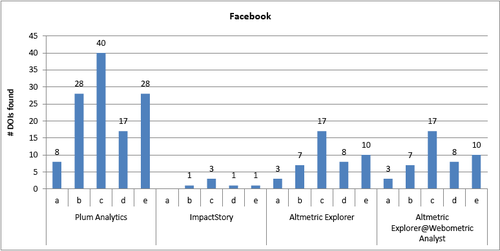

Twitter is best covered by Altmetric Explorer and ImpactStory, while Plum Analytics only generates data for one DOI (see Figure 4). Facebook, instead is best covered by Plum Analytics, with only occasional results by ImpactStory (see Figure 5). Wikipedia is equally covered by Plum Analytics and ImpactStory, but not represented by the other services. (Jobmann et al., 2014)

Figure 3. Coverage of DOIs on Mendeley for each section found by the data providers. The Leibniz sections are a) Humanities and Educational Research, b) Economics, Social Sciences, Spatial Research, c) Life Sciences, d) Mathematics, Natural Sciences, Engineering, and e) Environmental Sciences (n=1,717).

Figure 4. Coverage of DOIs on Twitter for each section found by the data providers. The Leibniz sections are a) Humanities and Educational Research, b) Economics, Social Sciences, Spatial Research, c) Life Sciences, d) Mathematics, Natural Sciences, Engineering, and e) Environmental Sciences (n=1,717).

The study notes that Plum Analytics drew its Twitter data from Topsy.com. After the acquisition of Topsy by Apple, the Twitter feed to Plum Analytics was turned off. Fortunately, Plum Analytics has since replaced its Twitter data source and now has a robust Twitter solution. Today, the Twitter results for Plum Analytics for this set of articles would look very different.

Figure 5. Coverage of DOIs on Facebook for each section found by the data providers. The Leibniz sections are a) Humanities and Educational Research, b) Economics, Social Sciences, Spatial Research, c) Life Sciences, d) Mathematics, Natural Sciences, Engineering, and e) Environmental Sciences (n=1,717).

Conclusion

The researchers found that, even for the same data sources, coverage varies across providers in ways that are not always easily understood.

First, the data providers fetch altmetric data from different social media platforms and with varying detailedness (e.g., Plum Analytics retrieves Facebook likes, comments, and shares, whereas ImpactStory only collects number of shares). Out of the studied data providers, Plum Analytics registers the most metrics for the most platforms. (Jobmann et al., 2014)

Even when all services appear to be using the same metrics from the same supplier, they can still vary.

Second, the data providers differ in the number of DOIs they find on the social media platforms. Especially in the case of Mendeley, Altmetric Explorer performs worst in retrieving readership information for DOIs…Third, the altmetric impact values provided by Plum Analytics, ImpactStory, Altmetric Explorer, and Webometric Analyst for the publications in the data set also considerably deviate. Oftentimes it is the Altmetric Explorer search which results in lower impact values. (Jobmann et al., 2014)

We encourage you to read this article to see even more results and analysis.

References

Jobmann, A., Hoffmann, C.P., Künne, S., Peters, I., Schmitz, J. & Wollnik-Korn, G. (2014). Altmetrics for large, multidisciplinary research groups: Comparison of current tools. Bibliometrie – Praxis und Forschung, 3, http://www.bibliometrie-pf.de/article/viewFile/205/258 [Accessed on February 12, 2015]